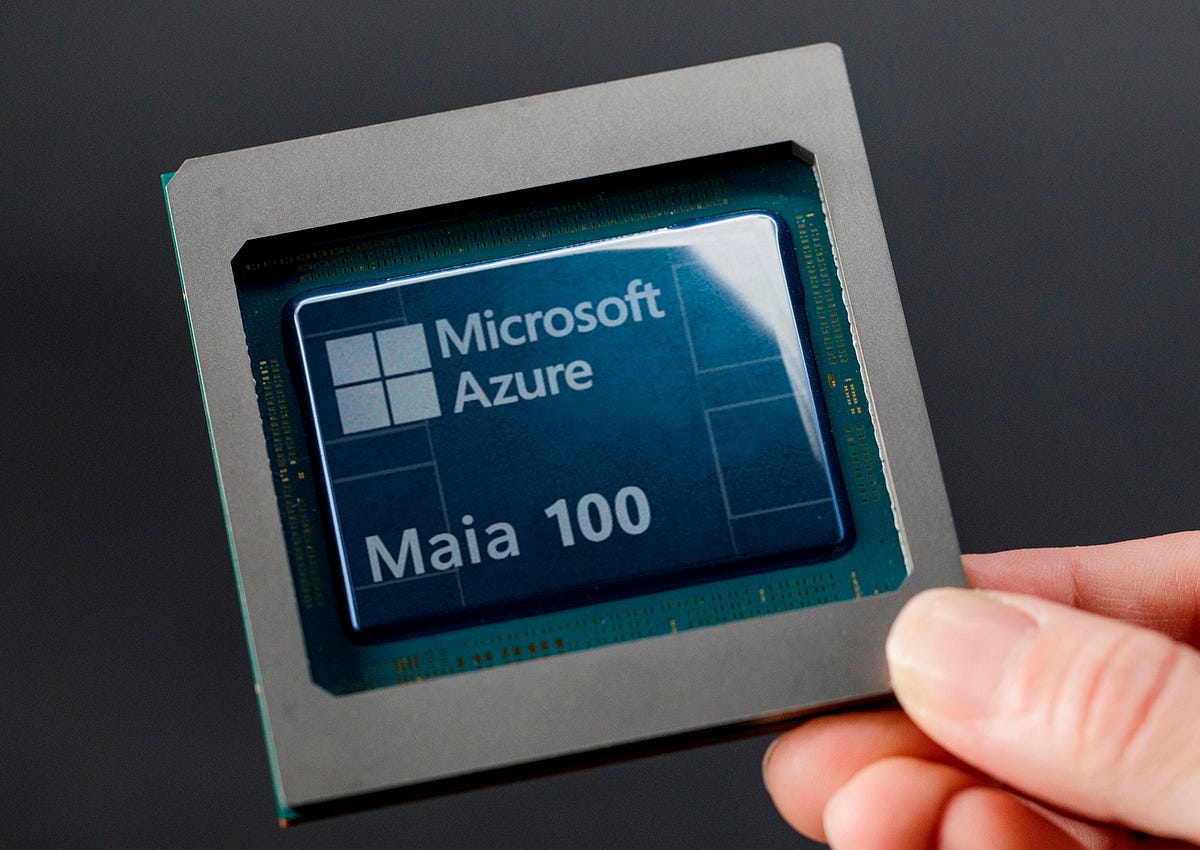

Microsoft’s Bold Move: The AI Chip That Could Reshape Cloud Computing

The tech world rarely sleeps, but Microsoft’s latest announcement has sent shockwaves through Silicon Valley that even the most jaded industry observers couldn’t ignore. The Redmond giant has pulled back the curtain on a proprietary AI chip that promises to fundamentally alter the balance of power in cloud computing—a market currently dominated by a fierce triumvirate of Microsoft itself, Amazon Web Services, and Google Cloud.

This isn’t just another incremental hardware update. Microsoft’s entry into custom silicon for artificial intelligence workloads represents a calculated strike at the heart of its competitors’ advantages, potentially redrawing the entire landscape of enterprise AI deployment.

Why Custom Silicon Matters Now

To understand the magnitude of this development, we need to appreciate the current state of AI infrastructure. For years, companies building AI systems have relied heavily on graphics processing units (GPUs) from NVIDIA, which has enjoyed a near-monopoly in AI training and inference chips. This dependence created a bottleneck—both in terms of supply and cost—that has frustrated every major cloud provider.

Amazon recognized this vulnerability early, developing its own Graviton processors for general computing and Inferentia chips for AI inference. Google has run its Tensor Processing Units (TPUs) in its own data centers since 2015 and announced them publicly in 2016, giving it a proprietary advantage for machine learning workloads. Microsoft, despite its massive Azure cloud platform and leadership in AI through its partnership with OpenAI, has been notably absent from the custom chip conversation until now.

That absence just ended with dramatic flair.

What Makes Microsoft’s Approach Different

While details about the specific architecture remain closely guarded, what we know about Microsoft’s strategy reveals a different philosophy than its competitors. Rather than simply creating an NVIDIA alternative, Microsoft appears to be designing silicon optimized specifically for the types of large language models and generative AI systems that have captured the world’s imagination over the past two years.

The company’s deep collaboration with OpenAI provides a unique advantage here. Microsoft has been running GPT-4, DALL-E, and countless other cutting-edge models at massive scale through Azure. This real-world experience with the most demanding AI workloads in existence has given Microsoft’s chip designers invaluable insights into exactly what tomorrow’s AI infrastructure needs to deliver.

Consider the economics at play. Running ChatGPT for millions of users isn’t just technically challenging—it’s extraordinarily expensive. Every query, every generated image, every conversation consumes computational resources that translate directly to energy costs and hardware expenses. A chip that can perform these operations even twenty or thirty percent more efficiently could save hundreds of millions of dollars annually while simultaneously enabling new capabilities that current hardware makes cost-prohibitive.
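To make that arithmetic concrete, here is a rough back-of-the-envelope sketch. The $1 billion annual compute bill is a purely hypothetical figure chosen for illustration, not a number Microsoft or OpenAI has reported, and the function name is ours. It also assumes that "more efficient" means more work per dollar, so the same workload costs 1 / (1 + gain) of what it did before.

```python
def annual_savings(annual_spend_usd: float, efficiency_gain: float) -> float:
    """Estimate yearly savings when the same workload gets cheaper to run.

    Assumption: an efficiency gain of g means g more work per dollar,
    so the same workload costs 1 / (1 + g) of the original spend.
    """
    if efficiency_gain < 0:
        raise ValueError("efficiency_gain must be non-negative")
    return annual_spend_usd * (1 - 1 / (1 + efficiency_gain))


# Hypothetical spend, for illustration only (not a reported figure).
HYPOTHETICAL_ANNUAL_SPEND_USD = 1_000_000_000

for gain in (0.20, 0.30):
    saved = annual_savings(HYPOTHETICAL_ANNUAL_SPEND_USD, gain)
    print(f"{gain:.0%} gain -> ${saved / 1e6:.0f}M saved per year")
# prints:
# 20% gain -> $167M saved per year
# 30% gain -> $231M saved per year
```

Under these assumptions, a bill on the order of a billion dollars a year is what it takes for a twenty to thirty percent gain to reach the hundreds of millions. A smaller bill shrinks the savings proportionally.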

The Threat to Amazon and Google

For Amazon Web Services, which has built its empire on being the reliable, cost-effective backbone of the internet, Microsoft’s chip represents a direct challenge to its fundamental value proposition. If Azure can offer superior AI performance at competitive prices, enterprises evaluating where to build their AI infrastructure face a much more complicated decision.

Amazon has certainly invested in its own silicon, but the company’s chips have primarily focused on general-purpose computing and more traditional machine learning tasks. The explosive growth of generative AI—a category that barely existed in meaningful commercial form three years ago—may have caught AWS’s silicon roadmap slightly off-guard.

Google faces a different kind of threat. The company pioneered custom AI chips and has arguably the most advanced in-house silicon expertise of the big three cloud providers. However, Google’s TPUs have been optimized for Google’s own needs and research priorities. Microsoft’s advantage lies in its position as the primary infrastructure provider for OpenAI, whose models have defined the current generation of commercial AI applications.

If Microsoft’s chips are specifically tuned for the transformer architectures and attention mechanisms that power GPT-style models, they could offer meaningful advantages for the many enterprises now racing to integrate generative AI into their products and workflows. Google’s technical excellence matters less if customers are optimizing for a different kind of workload.

Beyond the Cloud Wars

The implications extend far beyond which cloud provider wins the most enterprise contracts this quarter. Custom AI chips from all three major providers signal a fundamental shift in how the technology industry approaches innovation.

For decades, the industry operated on a model where specialized companies—Intel for CPUs, NVIDIA for GPUs—created general-purpose chips that everyone used. The hyperscalers buying those chips competed on software, services, and operational efficiency. That model is crumbling. When your competitive advantage depends on AI capabilities, controlling the full stack from silicon to software becomes not just desirable but essential.

This vertical integration creates both opportunities and concerns. On the positive side, chips designed specifically for AI workloads should be more efficient, powerful, and cost-effective than general-purpose alternatives. Innovation should accelerate as hardware and software teams work in tight coordination rather than across corporate boundaries.

The darker possibility is a future where AI capabilities become increasingly locked into specific cloud platforms. If Microsoft’s chips only work optimally with Azure, Google’s only with Google Cloud, and Amazon’s only with AWS, enterprises face reduced portability and increased vendor lock-in. The open, interoperable cloud ecosystem that allowed businesses to move workloads between providers could fragment into incompatible islands.

What This Means for Developers and Enterprises

For organizations building AI-powered products, Microsoft’s chip announcement demands attention. The choice of cloud provider has always mattered, but the performance and cost characteristics of custom AI silicon could make that decision even more consequential.

Companies heavily invested in the OpenAI ecosystem, using GPT-4 for customer service and analysis or DALL-E for creative work, may find that Azure with Microsoft’s custom chips offers the best performance for those models. Those building on Google’s Gemini models might find Google Cloud with TPUs remains their best option. The era of cloud-agnostic AI deployment may be ending before it truly began.

Developers will need to pay closer attention to the hardware their code runs on. Optimizing for specific silicon architectures, once the domain of only the most performance-critical applications, may become standard practice for anyone building AI features at scale.

The Road Ahead

Microsoft’s AI chip doesn’t guarantee victory in the cloud wars, but it eliminates a significant disadvantage and potentially creates a meaningful edge. The company that once missed the mobile revolution and stumbled in its first cloud efforts has positioned itself at the forefront of the AI revolution.

Amazon and Google won’t stand idle. Expect announcements of their own AI chip advances, deeper optimizations, and aggressive pricing to maintain their positions. The competition benefits everyone building with AI—better performance, lower costs, and faster innovation.

But make no mistake: the game has changed. The age of proprietary AI infrastructure has arrived, and Microsoft just announced it’s playing to win.
